Public Policy Sessions 2024 (summary)

On May 15th, the ISOC Switzerland Chapter hosted the Public Policy Sessions 2024, organized by Bernie Hoeneisen, co-founder of ISOC-CH. The event included a diverse set of introductory talks and a very interesting panel on the topic of disinformation online.

You can watch the recorded live stream here: https://livestream.com/internetsociety/isoc-ch-public-policy2024

First, Markus Kummer (ISOC-CH Chapter Advisory Council Representative) introduced the main objectives and history of the Internet Society, summarized with the phrase “We cannot take the Internet for granted, but we need to fight for it.” Regarding the ISOC Switzerland Chapter, Markus stressed that accessibility, one of ISOC’s main objectives, is not an issue in Switzerland. There are, however, important policy and regulation aspects to which ISOC-CH contributes in collaboration with other local organizations like the Pirate Party, which had a strong presence at the conference.

Monica Amgwerd (general secretary of the Pirate Party Switzerland) presented the Digital Integrity Cantonal Initiative, launched in March 2024. The initiative's main request is to recognize digital integrity, which concerns our private data, as a fundamental human right analogous to physical integrity. It comprises six rights: the 1) right to be forgotten, 2) right to an offline life, 3) right to information security, 4) right not to be judged by a machine, 5) right not to be surveilled, measured and analyzed, and 6) right to protection from the use of our data without consent. Some of these rights are already covered by special legislation, but treating them as fundamental rights can bring awareness and stronger legal protection. Integrating digital integrity into the Cantonal Constitution of Zurich is considered a first step towards moving to the national level.

With an overwhelming number of 9,841 signatures, the initiative was submitted to the Canton of Zurich on August 21, 2024. The canton will now work with the initiative committee to draft the text of the law. A vote is expected in the next few years. More info at https://digitale-integritaet.ch/

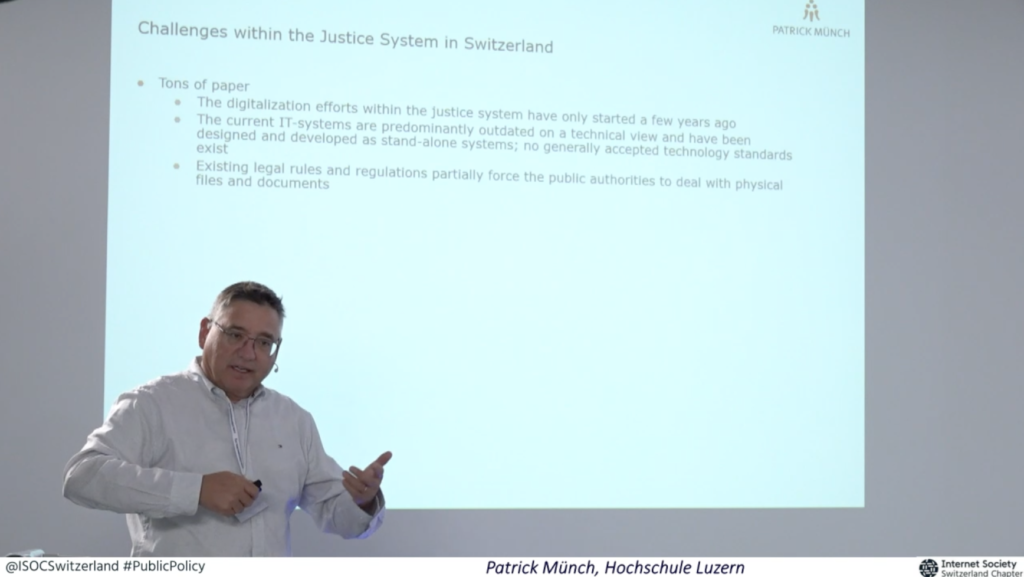

Taking a different legal perspective, Patrick Münch (Hochschule Luzern) was introduced by Marianthe Stavridou (ISOC-CH Vice-chair) and presented very recent developments regarding digitalisation in Swiss justice, and more specifically the Justitia 4.0 programme, https://www.justitia40.ch/. Part of this programme is the Justicia Swiss platform, https://justicia.swiss, which aims to implement secure digital communications between lawyers and the justice system and, hopefully, to eventually replace paper as the only communication medium. It is important that the whole platform is open source (“public money, public code”) and that it was designed to minimize privacy issues as much as possible. As Patrick stressed, the platform fulfils all six digital integrity rights presented by the Pirate Party.

After the introductory talks, a dedicated panel on “Combating disinformation and its conflict with human rights” followed, with short position statements by all participants.

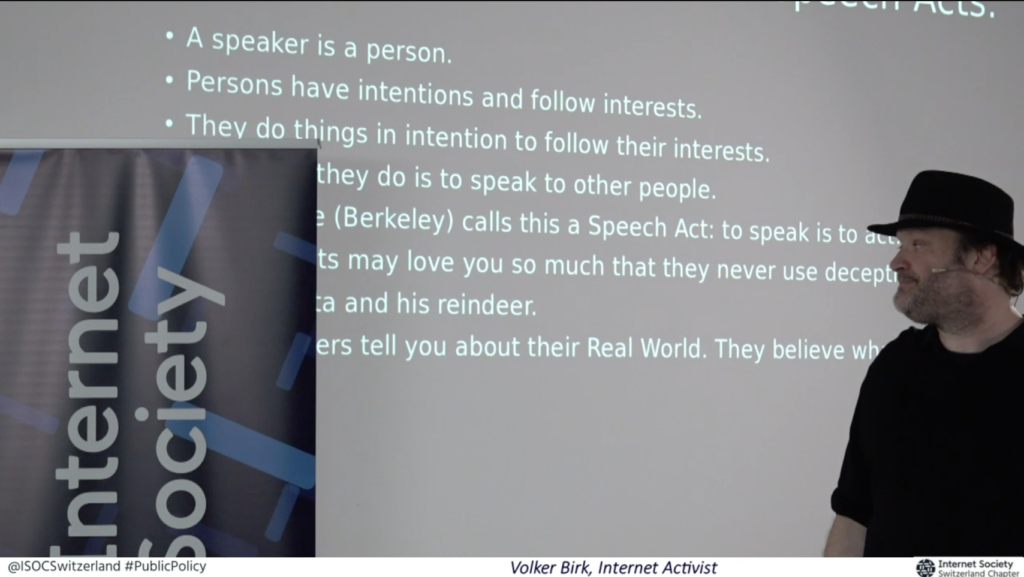

Volker Birk, Internet activist, warned us that “real world understanding comes mostly from communication instead of perception or evidence” and that a politician’s job in a democracy is a competitive business of convincing people of things that are not necessarily true. A reference to Saul Kripke’s “possible worlds” reminds us that things exist only in our minds, hence his advice: “Be careful when you believe what you think”.

Elif Askin, University of Zurich, provided an in-depth introduction to the legal framework in Switzerland regarding the governance of disinformation and its relationship to European regulations. She distinguished between unlawful and lawful content, and between the creator, disseminator, and recipient of disinformation. She stressed that Switzerland has no specific legislation on disinformation and that freedom of expression protects even untrue factual claims. Special legal provisions provide exceptions that protect the individual, democratic decision-making, elections and voting, public health, public order, and public safety. A very important point in relation to human rights is that regulatory interventions by the state can take place only in cases of power imbalance between individuals and companies such as social media platforms. These companies are not bound by human rights obligations, which are directed only at states. Still, the law leaves many open questions regarding the responsibility of social media platforms, and human rights can provide only a supportive framework for the more specific legislation that is needed, like the European Digital Services Act (DSA).

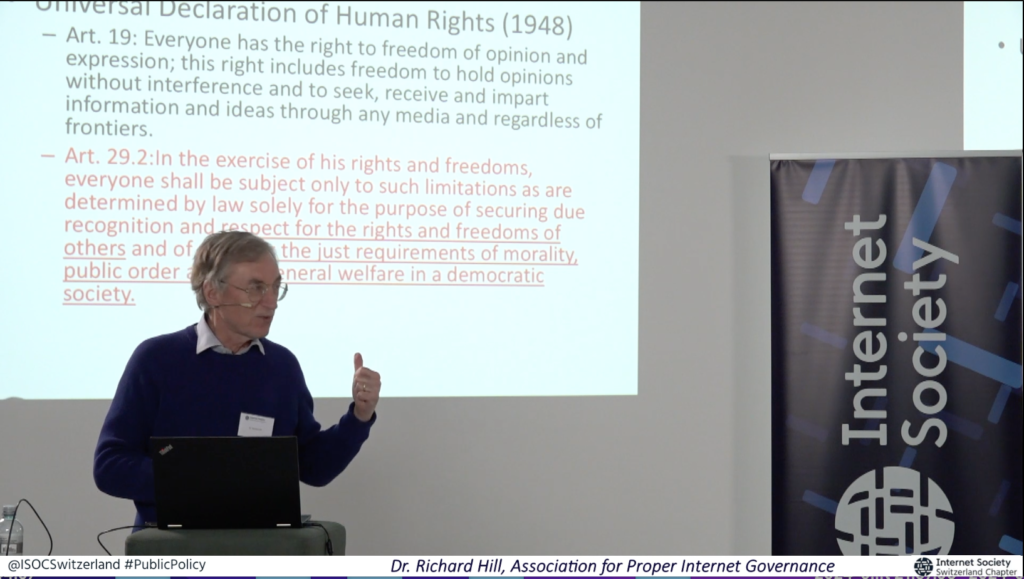

Richard Hill, Association for Proper Internet Governance, offered a lively history of disinformation, stressing that freedom of speech is a relatively recent development in human history and that extreme examples of censorship are not so old, like the Index Librorum Prohibitorum, which existed until 1966. The French declaration of human rights (1789) and the US First Amendment (1791) mark the historic shift towards freedom of speech, followed by international agreements in the second half of the 19th century, like the International Telegraph Convention (1865). Publishers, however, have always had the power to decide what is published and what is not. Today, platforms like Twitter and Facebook can decide what counts as disinformation, which makes it possible for states and other actors to outsource censorship to them. At the same time, the platforms are not liable for harmful disinformation, because they choose to be, in legal terms, not a publisher but an intermediary or transporter with no liability. Finally, copyright is another restriction on free speech, one that concerns not harmful or untrue content but simply illegal content.

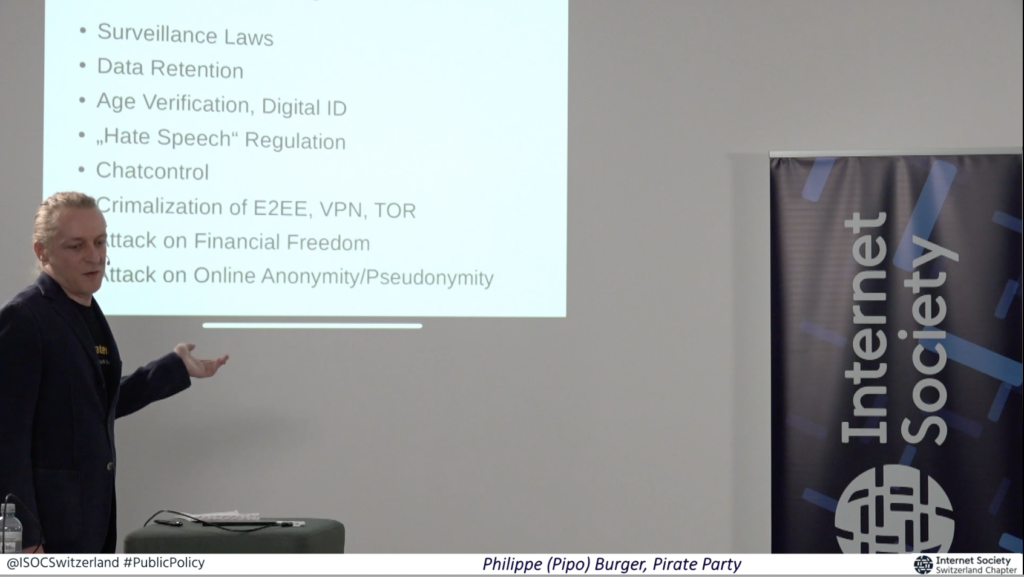

Philippe (Pipo) Burger, Pirate Party Switzerland, offered a more technical perspective on the “dark side of fighting disinformation”, with very interesting examples from the Swiss experience, like the hosting of Wikileaks on Swiss servers and surveillance-friendly laws that cause a “chilling effect” and help to control the flow of information. One of the biggest technical challenges would be to control the power of artificial intelligence, for example deep fakes, which can significantly harm the trustworthiness of information online. That trustworthiness is already questionable, as even established media outlets like the NYT have been shown to disseminate false information. Moreover, depending on machines to manage reports of false information can make things even worse, with examples of people who tried to help being punished by mistaken algorithms. In this environment, the power of platforms to censor even truthful information, as in the Hunter Biden laptop controversy or the lab leak controversy, adds one more layer of distrust. And the situation does not improve when those claiming to fight disinformation, like the “Global Engagement Center”, are backed by military organizations and put surveillance at the forefront of their efforts, while leaving the definition of the term itself rather vague.

The following panel summarized the most important points and focused on possible practical solutions, like the need for regulation to ensure interoperability between platforms, the important role of education and of the local organization of democratic processes with low-tech solutions, and, most importantly, personal responsibility regarding the sharing of our data with AI bots and cloud platforms.

However, the fact that disinformation is now cheaper and open to everyone makes things more complex than in the past. An example discussed in depth was the possibility of implementing watermarking for AI, in order to know at least that certain content is AI-generated. The situation is complex because even if a sound technical solution were in place, it could never be impossible to circumvent, and non-watermarked content would then be trusted even more, whereas today there is at least always a doubt.

ISOC-CH’s role is to keep such discussions open and to create bridges between the Swiss and international contexts through the wide network of the ISOC community and, more recently, through a new Horizon Europe project called NGI0 Commons Fund. The fund finances open source projects, which can be part of the solution to the complex world of disinformation online.